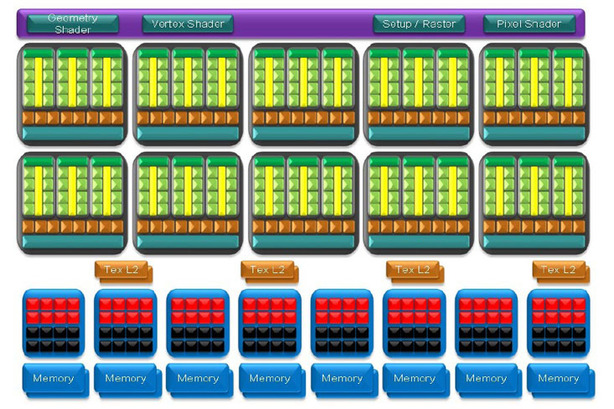

GT200 graphics architecture

As we mentioned earlier, Nvidia’s architectures since the GeForce 8800 GTX have taken on two personalities – one designed for the typical graphics operations we’re used to seeing GPUs ploughing through, and another designed for highly parallelised compute operations. The two architectures are very similar, but there are some distinct differences at the top and bottom ends of the chip – we’ll highlight those here before diving deeper into the shader core and its capabilities.

At the high level, the full-fat version of the GT200 chip (i.e. the GeForce GTX 280) features 240 fully generalised 1D scalar shader processors, 80 texture units and 32 ROPs that back out onto a 512-bit memory interface and a 1GB frame buffer.
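To put those unit counts in proportion, the short Python sketch below derives a few ratios from the figures quoted above. The class and field names are illustrative rather than Nvidia terminology, and nothing here depends on clock speeds, which this section doesn't cover.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuConfig:
    """Unit counts for a GPU; the field names are illustrative only."""
    shader_processors: int
    texture_units: int
    rops: int
    memory_bus_bits: int

    def shaders_per_texture_unit(self) -> float:
        return self.shader_processors / self.texture_units

    def shaders_per_rop(self) -> float:
        return self.shader_processors / self.rops

    def bus_bytes_per_transfer(self) -> int:
        # Width of the memory interface expressed in bytes.
        return self.memory_bus_bits // 8


# Figures for the GeForce GTX 280 as quoted in the text.
GTX_280 = GpuConfig(
    shader_processors=240,
    texture_units=80,
    rops=32,
    memory_bus_bits=512,
)

if __name__ == "__main__":
    print(GTX_280.shaders_per_texture_unit())  # 3.0
    print(GTX_280.shaders_per_rop())           # 7.5
    print(GTX_280.bus_bytes_per_transfer())    # 64
```

In other words, there are three shader processors for every texture unit, and the 512-bit interface is 64 bytes wide.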

Curiously, there is no support for DirectX 10.1, which is notable given the controversy surrounding Assassin's Creed – the first game to support the updated API. Nvidia said that the feedback it received from the development community was very much in favour of the engineering team focusing its efforts on other aspects of the architecture and on integrating new features like GPU-accelerated physics, but I'm not sure I buy that reasoning.

Nvidia's GT200 GPU - graphics flow diagram

In further conversations I held with Tony Tamasi and John Montrym, the chief architect for GT200, it became apparent that Nvidia supports a subset of DirectX 10.1 features, but doesn't comply fully with the DirectX 10.1 requirements. Since Microsoft has moved away from caps bits to an all-or-nothing approach to supporting the API, Nvidia can only claim DX10.0 compliance. When I asked Tamasi what D3D10.1 features GT200 didn't support, he gave a cagey answer.

"Frankly, we'd rather not talk about what's not supported for a very simple reason," said Tamasi. "The red team will go out and try to get every ISV to implement things that aren't supported for competitive reasons. That really isn't good for game developers, Microsoft and also for us too. So I'd rather not say what [DX10.1] features we don't support."

The natural follow-up to that was to ask what D3D10.1 features Nvidia does support, but neither was keen to discuss that in great detail – I guess both were worried that ATI would use a process of elimination to work out which features GT200 didn't support if they went into too much detail. "I can tell you that one thing we support for sure is reading from the multisample depth buffer [with deferred rendering], which right now seems to be the thing that people are finding interesting in 10.1. And so for the ISVs that are doing that, we're supporting them directly [and exposing the feature to them]," Tamasi added.
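The difference between the old caps-bit model and Direct3D 10's all-or-nothing approach can be sketched in a few lines of Python. This is a toy model, not the Direct3D API: every feature name other than the multisample depth read is a placeholder, and the hardware set is hypothetical rather than a statement of what GT200 actually implements.

```python
# Toy model of two ways an API can expose hardware features.
# Feature names are placeholders; only "msaa_depth_read" comes from the text.

DX10_0_REQUIRED = frozenset({"feature_a", "feature_b"})
DX10_1_REQUIRED = DX10_0_REQUIRED | {"msaa_depth_read", "feature_c", "feature_d"}

# Ordered from the highest level to the lowest.
FEATURE_LEVELS = [("10.1", DX10_1_REQUIRED), ("10.0", DX10_0_REQUIRED)]


def caps_bits(hardware):
    """Caps-bit model: each feature is advertised individually."""
    return {feature: feature in hardware for feature in sorted(DX10_1_REQUIRED)}


def feature_level(hardware):
    """All-or-nothing model: report the highest level whose full set is met."""
    for name, required in FEATURE_LEVELS:
        if required <= hardware:  # subset test
            return name
    return None


# Hypothetical GPU: everything in 10.0 plus a single 10.1 feature.
hypothetical_gpu = DX10_0_REQUIRED | {"msaa_depth_read"}

if __name__ == "__main__":
    print(caps_bits(hypothetical_gpu)["msaa_depth_read"])  # True
    print(feature_level(hypothetical_gpu))                 # 10.0
```

Under the all-or-nothing model the extra feature is invisible through the standard API, which fits with Tamasi's comment about exposing it to ISVs directly.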

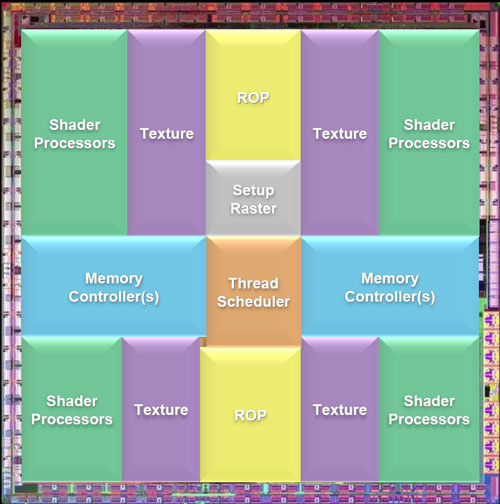

GT200's chip layout

I found this collection of responses rather disconcerting and it certainly doesn't put my mind at rest over the whole Assassin's Creed debacle. Developers are lazy, and they want to achieve certain things with a minimal amount of effort – clean code equals fast code, generally speaking. DirectX 10.1 was created with the idea of making things easier for developers – some things were fixed, and other capabilities allowed developers to achieve certain effects with less code.

Nvidia's decision not to support the API makes developers' lives harder, as they now start questioning the ROI on implementing a DX10.1 path. That's a shame, if you ask me, because Nvidia will have to support DirectX 10.1 at some point – in order to achieve D3D11 compliance, it'll have to fully support both 10.0 and 10.1. And what's more, the accusations it is throwing into the cauldron are almost completely incomprehensible – AMD doesn't have the cash to buy developers off on a large scale and, having talked about its developer relations program at great length, I'm not sure that is what AMD wants to do anyway.
